Intune hacking: when is a "wipe" not a wipe

If you’re reading this blog, the chances are that you use Microsoft Intune for mobile device management, or you work with Microsoft Intune in some way. (Or you want to break a Microsoft Intune deployment for some reason.)

Intune is used to provision and set up endpoint devices for remote users, covering software installation, component configuration and other factors, so that an employee can be provided with a brand new device which is set up and configured in line with company specifications and policies. Using Intune allows you to achieve a consistent baseline for your workstation builds, manage these in detail remotely and enforce stringent conditional access policies.

The flexibility and utility of remote device management using Intune is a real enabler of fully-remote workforces and zero-trust environments. There is, however, a downside to this flexibility: it can compromise overall security.

For reasons that I will go into, when the threat you are countering is a malicious insider, or another adversary with physical access to the laptop and a user account, privilege escalation becomes more viable. There are also some intricacies in the way Intune operates that need to be taken into account in risk mitigation decisions.

Key exposure by design

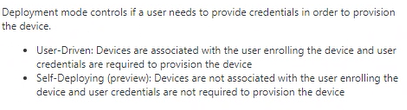

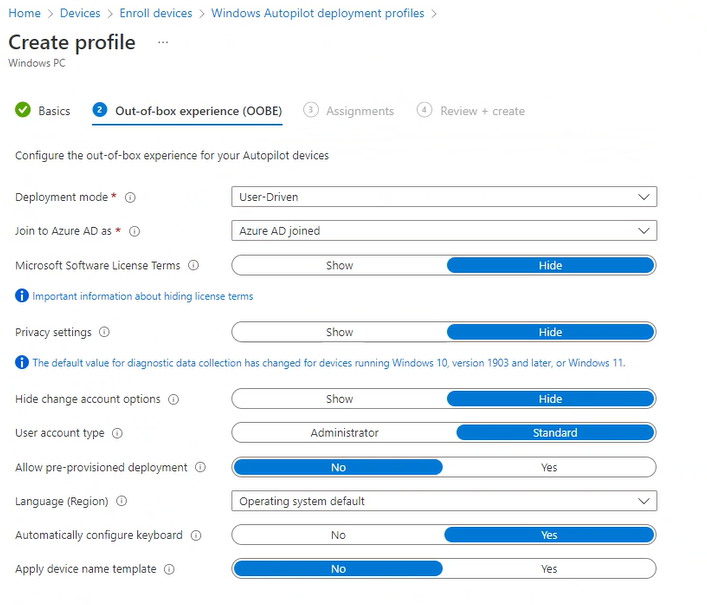

There are two different Out-of-box Experiences (OOBE) through which Intune-managed devices can be provisioned with Autopilot in the field: user-driven (the default and current mechanism) and self-deploying (currently in preview).

In user-driven mode, a device being provisioned requires association with an existing user account for the organisation. When the user receives their new device, their experience is:

- Unbox the device and turn it on

- Select language, keyboard and locale settings

- Connect to the network

- Log in using their existing user account for installation and configuration to continue

The device will then join the organisation, enrol in Intune and apply the organisation configuration settings in preparation for first use.

Self-deploying mode differs – all the user need do is set location-specific settings and connect to a network. The device configuration occurs before a user ever logs on to the device. Self-deploying mode is currently in public preview, so user-driven mode is more commonly adopted.

Segueing slightly into core controls for a moment: BitLocker encryption of drives is a key control both in corporate environments and for individuals. A BitLocker encrypted hard drive is protected from unauthorised access and tampering; to access a BitLocker hard drive, you need to provide a key. If a laptop with a BitLocker encrypted drive is stolen, you can be reasonably sure that the thief won’t be able to alter the contents of the hard drive without being given the key, and that they won’t be able to remove and read sensitive data from another system.

In the threat model of a lost or stolen device, BitLocker is extremely valuable. Conversely, if an adversary has possession of the BitLocker keys and physical access to the drive, they have full read/write access to the device. In any operating system, this level of access will always allow an adversary to escalate their privileges and take complete control of the device.

If you need to account for the insider threat within your remote deployments (and some organisations will need to treat insider threat as a specific and recurring concern), user-driven deployment mode may expose you to risks you haven’t considered.


When an endpoint device is provisioned via the user-driven OOBE, the Primary User associated with the device “has access to self-service actions”, as the management portal tells you. In practice, this means that the Primary User is able to access the BitLocker recovery key for the system drive of the device via the Devices settings page of their Microsoft 365 account.

A user with malicious intent can access the BitLocker recovery key for their device easily, and can use this in various ways to subvert controls you have in place and escalate their privileges on the endpoint you have issued them. For example, the user can boot into recovery mode, provide the recovery key and then do the good ol’ tried-and-tested Sticky Keys trick to give themselves an elevated shell whenever they want it, copy off SAM and SYSTEM hives to extract local credentials or tamper with controls such as AV or EDR.

Now, this is a “by design” feature, but many historic risk mitigation models to protect against malicious insiders were assessed at a time when BitLocker keys would have been set at installation time by administrators, stored securely and – importantly – not exposed to remote, untrusted users, except when administrators were aware of this for a specific need. The exposure of keys like this directly to end users who might be malicious is quite a departure from the traditional control model, and therefore warrants additional consideration.

There is no logging of access to these keys: you can’t determine whether a user has accessed the recovery key for their device or not. You can’t alert on reading of these keys, so you’re unable to determine whether somebody is planning to access or use their recovery key.

There are workarounds you can put in place, but understanding the limitations of these within an Intune-managed environment is important.

To effect provisioning in user-driven mode, a Primary User has to be set for a device. Once a device has been provisioned, you can remove the Primary User from the device properties. This has the effect of removing access to the BitLocker keys from the user of the device. It means that (at least after initial setup) a user who develops malicious intent won’t have access to these keys to use that attack path. The downside, of course, is that all your users without malicious intent won’t be able to perform a lot of recovery-type actions without intervention from your support teams, so there’s an immediate trade-off.

If you remove the Primary User from the device, and then wipe or reset the device, it will take the same Autopilot profile again (user-driven) and once more, because a Primary User is required, the BitLocker keys will be exposed to a user profile again. You could put a process in place that specifies the removal of the Primary User as soon as a device is provisioned, followed by rotation of the BitLocker keys for the device. This would mean the current keys would not have been exposed to the user, and mitigates a lot of the risk.
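As a rough illustration of that process, the two steps could be scripted against Microsoft Graph. The sketch below only builds the ordered list of requests and does not send anything. The endpoint paths (`users/$ref` and `rotateBitLockerKeys` under the beta `managedDevices` resource) are our understanding of the Graph beta API and should be treated as assumptions to verify against current Microsoft documentation; the function name `post_provisioning_plan` is hypothetical.

```python
from typing import List, Tuple

# Assumption: Microsoft Graph beta base URL. The endpoint paths used below
# should be checked against current Graph documentation before real use.
GRAPH_BETA = "https://graph.microsoft.com/beta"


def post_provisioning_plan(managed_device_id: str) -> List[Tuple[str, str]]:
    """Return the ordered (HTTP method, URL) calls for one provisioned device.

    Order matters: the Primary User is removed first, so that the key
    produced by the rotation is never visible in that user's account.
    """
    if not managed_device_id or "/" in managed_device_id:
        raise ValueError("managed_device_id must be a non-empty Intune device ID")

    device = f"{GRAPH_BETA}/deviceManagement/managedDevices/{managed_device_id}"
    return [
        # Step 1: remove the Primary User association from the device.
        ("DELETE", f"{device}/users/$ref"),
        # Step 2: ask the device to rotate its BitLocker recovery key.
        ("POST", f"{device}/rotateBitLockerKeys"),
    ]


if __name__ == "__main__":
    # Placeholder device ID, for illustration only.
    for method, url in post_provisioning_plan("00000000-0000-0000-0000-000000000000"):
        print(method, url)
```

Sending these requests would additionally need an authenticated client with suitable Intune permissions, and the process would have to be repeated after every wipe or reset, since re-provisioning sets a Primary User again.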

The key takeaway here is that additional process is needed around remote access provisioning of devices in order to protect device integrity from malicious users – and these processes are likely to be manual unless or until the Autopilot process is adapted to alter this situation. There is a risk here that needs to be assessed and managed, and full automation may not be a realisable goal at this stage.

How worried should we be?

If you truly have a malicious user with the technical knowledge to attack you at the point where you are provisioning device access, the truth is that they will likely find a way to attack you whether you have locked down your BitLocker keys or not.

It’s well-known that Windows Autopilot has a troubleshooting mode which is available in both OOBE modes: pressing Shift+F10 during provisioning will open up a command prompt with SYSTEM access that can be used by the recipient of the device to tamper with the system at setup time. It’s a much smaller window of opportunity than “at any time the device has a Primary User set”, but it’s still important to note. If you want to be able to rely on BitLocker to provide you with confidence in system integrity, however, preventing access to device encryption keys has to be part of your risk calculation.

Decommissioning and reprovisioning devices – persistence of compromise

The advice, when faced with a known-compromised device needing to be re-used, has been – for as long as we can remember – to reinstall afresh from a known-good source. When provisioning happened in office environments, administrators would be able to take a known-compromised device and reinstall it entirely from scratch using good media. Now that organisations demand remote provisioning, this isn’t necessarily available as an option without physical retrieval of the provisioned device.

The change of operating paradigm means that there are risks to consider with device decommissioning and reprovisioning which might not be obvious at first glance.

When exploring Intune risk scenarios with our customers, we demonstrated the ability of a malicious user to escalate privileges both at provisioning time (via the Autopilot troubleshooting console) and later (via the exposure of BitLocker recovery keys). In both instances, we chose to use the Sticky Keys technique to demonstrate exploitation and facilitate replication of the issue. Of course, once the engagement was over, this meant that the devices provided to us for provisioning had binaries within SYSTEM32 which had been altered – namely, the Sticky Keys executable had been overwritten with a copy of cmd.exe.

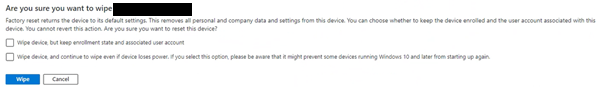

Our customer teams followed their documented process for decommissioning and reprovisioning devices ready for other users, initiating a wipe of the device within the Intune portal. There are several different options within Intune for devices: wipe, retire, delete, Autopilot reset and Fresh start.

Microsoft’s documentation says that a wipe (without retaining enrolment state and user settings):

Wipes all user accounts, data, MDM policies, and settings. Resets the operating system to its default state and settings.

Also in the documentation:

A wipe is useful for resetting a device before you give the device to a new user, or when the device has been lost or stolen. Be careful about selecting Wipe. Data on the device cannot be recovered. This level of device wipe follows a standard file delete process, rather than a low-level delete.

Wipe, on the surface, seems the natural option to choose when a device needs to be decommissioned and reprovisioned to another user – but a wipe might not be as comprehensive as you think. In particular, a wipe of a provisioned machine does not restore Windows binaries to a known-good baseline by comparing file hashes. What this meant for our customers was that the Sticky Keys hack had not been reversed on their wiped and re-provisioned devices: although the devices were “fresh” for a new user to operate, they were still in a compromised state and could be used for an escalation of privileges.

A wipe does remove arbitrary files which are added to SYSTEM32, but it won’t remove files that are expected to be there – such as the sethc.exe binary that has been overwritten with a copy of cmd.exe.
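A quick way to check a wiped device for this specific kind of leftover is to compare hashes, as in the minimal Python sketch below. It flags any logon-screen accessibility binary whose SHA-256 matches cmd.exe in the same directory. The list of binaries and the function names are illustrative assumptions, and the check only catches an exact copy of the shell; a fuller integrity check would compare against known-good hashes for the installed Windows build.

```python
import hashlib
from pathlib import Path
from typing import Iterable, List

# Illustrative list of accessibility binaries launchable from the logon screen.
ACCESSIBILITY_BINARIES = ("sethc.exe", "utilman.exe", "osk.exe",
                          "magnify.exe", "narrator.exe")
# Shells an attacker might copy over those binaries.
SHELL_BINARIES = ("cmd.exe",)


def sha256_of(path: Path) -> str:
    """Return the SHA-256 hex digest of a file, read in chunks."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        for chunk in iter(lambda: handle.read(65536), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_replaced_binaries(system_dir: Path,
                           targets: Iterable[str] = ACCESSIBILITY_BINARIES,
                           shells: Iterable[str] = SHELL_BINARIES) -> List[str]:
    """List target binaries whose contents are identical to a shell binary."""
    shell_hashes = {
        sha256_of(system_dir / name)
        for name in shells
        if (system_dir / name).is_file()
    }
    return [
        name for name in targets
        if (system_dir / name).is_file()
        and sha256_of(system_dir / name) in shell_hashes
    ]


if __name__ == "__main__":
    # On a clean system this is expected to print an empty list.
    print(find_replaced_binaries(Path(r"C:\Windows\System32")))
```

A non-empty result after a wipe would suggest the device still carries the modification described above and should be rebuilt from known-good media.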

The only way to be sure that a remote device has been reprovisioned entirely is for the user to initiate a system reset from the device, requesting the removal of everything and a cloud download and reinstall of Windows. The kicker, for administrators managing remote laptop devices, is that you need the remote user to cooperate in starting this process. If your remote user is the malicious insider you’re worried about, you can’t rely on their cooperation at all.

If you physically recover an asset from a suspect user, reinstalling from known-good media should still be your ideal solution prior to reprovisioning for another user. If you rely on a wipe only – because this is the most operationally convenient solution – there’s a chance of malicious persistence on your endpoints that you may not have fully appreciated.
